
PySpark — JDBC Predicate Pushdown

SQL databases are well-known data sources for transactional systems. Spark can connect to SQL sources out of the box through Java Database Connectivity (JDBC) drivers, and reading from them is as easy as reading from file data sources.
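A JDBC read in Spark is driven by a handful of reader options, chiefly `url`, `dbtable` and `driver`. The sketch below builds them for SQLite, assuming the xerial `sqlite-jdbc` driver (driver class `org.sqlite.JDBC`, URLs of the form `jdbc:sqlite:<path>`). The helper name `sqlite_jdbc_options`, the file `sales.db` and the table `orders` are hypothetical. The actual Spark call is left as a comment because it needs a session with the driver on its classpath.

```python
import os


def sqlite_jdbc_options(db_path: str, table: str) -> dict:
    """Return reader options for Spark's JDBC source pointed at a SQLite file.

    Assumes the xerial sqlite-jdbc driver, whose driver class is
    org.sqlite.JDBC and whose URLs look like jdbc:sqlite:<path>.
    """
    return {
        "url": f"jdbc:sqlite:{os.path.abspath(db_path)}",
        "dbtable": table,
        "driver": "org.sqlite.JDBC",
    }


# Hypothetical database file and table name, for illustration only.
opts = sqlite_jdbc_options("sales.db", "orders")
print(opts["url"])

# With a SparkSession that has the driver on its classpath, the read is
# a single call, much like reading a file:
# df = spark.read.format("jdbc").options(**opts).load()
```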

We will use a simple SQLite database to see how we can optimize the performance of reads. We will also dig into explain plans to understand how Spark optimizes these reads for better performance.
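Predicate pushdown means evaluating a filter inside the source database instead of after the rows have been transferred to the reader. As a Spark-free illustration (an analogy, not Spark's own mechanism), the sketch below uses Python's built-in `sqlite3` module with a hypothetical in-memory `orders` table. It compares fetching every row and filtering on the client with sending the filter to the database as a `WHERE` clause.

```python
import sqlite3

# Hypothetical demo table, held in memory so nothing touches disk.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
)
# 1,000 rows: every fourth row is "EU", the rest are "US".
conn.executemany(
    "INSERT INTO orders (region, amount) VALUES (?, ?)",
    [("EU" if i % 4 == 0 else "US", float(i)) for i in range(1000)],
)
conn.commit()

# No pushdown: pull every row across, then filter in Python.
all_rows = conn.execute("SELECT id, region, amount FROM orders").fetchall()
client_filtered = [row for row in all_rows if row[1] == "EU"]

# Pushdown: the predicate travels to the database as a WHERE clause,
# so only the matching rows come back.
pushed_rows = conn.execute(
    "SELECT id, region, amount FROM orders WHERE region = ?", ("EU",)
).fetchall()

print(len(all_rows), len(pushed_rows))  # 1000 250
```

Both paths end with the same 250 rows, but the pushed-down query transfers a quarter of the data. Spark's JDBC source aims for the same outcome: when a DataFrame filter can be translated to SQL, it is added to the query sent to the database, and the scan node in `df.explain()` typically lists it under `PushedFilters`.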

So, without further delay, let's jump into action.

To read from a SQLite database, we need to load the JDBC driver jar as a library in our notebook. This step is important: without the driver on the classpath, Spark cannot find a suitable driver to connect to the database.

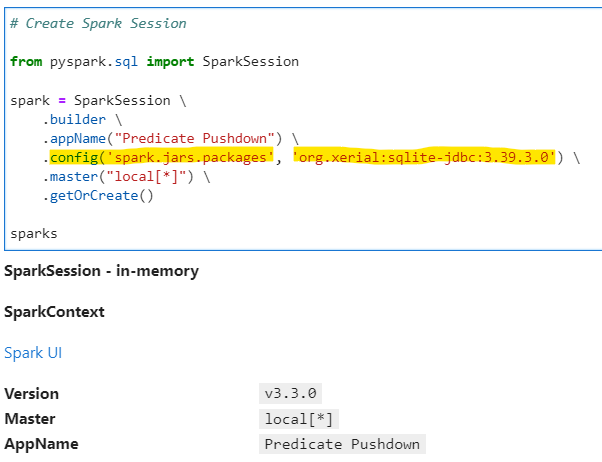

Check out below how the SparkSession is created so that the jar is imported as a library at runtime in the notebook.

```python
# Create Spark Session
from pyspark.sql import SparkSession

spark = SparkSession \
    .builder \
    .appName("Predicate Pushdown") \
    .config('spark.jars.packages', 'org.xerial:sqlite-jdbc:3.39.3.0') \
    .master("local[*]") \
    .getOrCreate()

# In a notebook, evaluating the session displays its details
spark
```

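Now that the JDBC library is imported successfully, let's create a Python decorator for performance measurement. We will use the “noop” format for benchmarking: it executes the whole query but writes nothing, so the measured time reflects the read and processing work rather than output I/O.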
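A timing decorator of this kind can be sketched as follows. This is not the author's implementation (which is cut off in this excerpt); the name `timed` and the `slow_sum` demo are hypothetical. It wraps any function, measures wall-clock time with `time.perf_counter`, and prints the elapsed seconds. The Spark usage with the “noop” sink is shown as comments, assuming a DataFrame `df` read over JDBC.

```python
import functools
import time


def timed(func):
    """Hypothetical decorator: print how long each call to func takes."""
    @functools.wraps(func)  # keep the wrapped function's name and docstring
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = func(*args, **kwargs)
        elapsed = time.perf_counter() - start
        print(f"{func.__name__} took {elapsed:.3f} s")
        return result
    return wrapper


# Plain-Python demo so the decorator can be tried without Spark.
@timed
def slow_sum(n):
    return sum(range(n))


total = slow_sum(1_000_000)

# Hypothetical Spark usage, assuming a DataFrame `df` read over JDBC:
# @timed
# def benchmark(df):
#     # "noop" runs the full query but discards the output
#     df.write.format("noop").mode("overwrite").save()
```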

# Lets create a simple…